In today’s digital world, deepfake technology has emerged as a dangerous tool for fraud. By creating realistic but fake videos of public figures, scammers trick people into believing in false endorsements or promises. Across the country, cases of people losing their savings to such AI-driven frauds are on the rise, and one such case in Bengaluru shows just how devastating the impact can be.

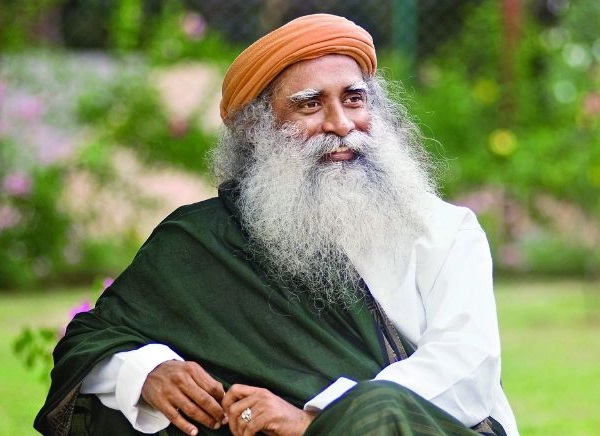

A 57-year-old woman from CV Raman Nagar fell victim to a scam that cost her Rs 3.75 crore. She was misled by a deepfake video in which spiritual leader Sadhguru appeared to endorse a stock-trading platform. Unaware of how convincingly artificial intelligence can imitate real personalities, she trusted the video. At the time, social media platforms were flooded with similar fake ads featuring Sadhguru’s voice. Concerned about this misuse, Sadhguru himself approached the Delhi High Court in June to protect his rights against unauthorised AI-generated content.

The woman’s ordeal began when she came across a reel on her phone suggesting that investing just $250 could change her financial future. Clicking the link took her to a page where she shared personal details such as her name, email and phone number. Soon after, she was contacted by individuals posing as representatives of a firm called “Mirrox”. Using names like Waleed B and Michael C, they connected with her through multiple phone numbers and added her to a WhatsApp group of about a hundred members.

To win her confidence, the fraudsters arranged Zoom sessions and taught her supposed trading techniques. Screenshots of large profits shared in the group further convinced her. Following their instructions, she transferred money to accounts provided by them and even downloaded the Mirrox app to track her investments. Between February and April, she transferred a total of Rs 3.75 crore, with the platform showing impressive returns that seemed to validate her trust.

Trouble began when she tried to withdraw her earnings. The fraudsters demanded more money for processing fees and taxes. When she refused, they cut off communication, leaving her stranded.

According to a senior police officer, the victim filed her complaint nearly five months later, making it difficult to trace the funds. Efforts are now underway to coordinate with banks to freeze the fraudsters’ accounts, but recovery remains uncertain.

This case is a stark reminder of how AI-driven deepfake technology, when misused, can devastate lives, especially when it exploits the trust people place in respected public figures.